Kubernetes (k8s) deployment

Node configuration

192.168.1.6 k8s-master

192.168.1.8 k8s-node1

192.168.1.9 k8s-node2

CPU, memory, and disk

CPU: 2 cores

Memory: 4 GB

Disk: 50 GB

System settings

Once Ubuntu 22.04 is installed, run the following checks and changes:

Check the network

After each node is installed, use ping to confirm that it can reach:

1. baidu.com (external connectivity);

2. the host machine;

3. the other nodes in the subnet.

Check the time zone

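If the time zone is wrong, the following command helps pick the correct one:

`sudo tzselect`

Follow the prompts to make a selection. Note that tzselect only prints the matching TZ value; `sudo timedatectl set-timezone <zone>` is what changes the system-wide setting.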
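The network and time zone checks above can be scripted. The following is a minimal sketch, not part of the original procedure: `check_reachable` is a hypothetical helper name, and the `-W` timeout flag assumes the Linux iputils version of ping.

```shell
# Sketch: report which of the given hosts answer a single ping.
# check_reachable is an illustrative name; the host list comes from the caller.
check_reachable() {
    local host failed=0
    for host in "$@"; do
        # -c 1: send one probe; -W 2: wait at most 2 seconds for a reply
        if ping -c 1 -W 2 "$host" >/dev/null 2>&1; then
            echo "ok   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    # Non-zero exit status if any host was unreachable
    return "$failed"
}

# Example call with the node addresses listed earlier:
#   check_reachable baidu.com 192.168.1.6 192.168.1.8 192.168.1.9
# Print the active time zone:
#   timedatectl show --property=Timezone --value
```

Add the host machine's address to the call as well; it is not listed in this article, so it is left out of the example.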

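Configure a domestic Ubuntu mirror

Because k8s is being installed on Ubuntu 22.04, switch APT to a mirror hosted in mainland China so that package downloads do not depend on reaching blocked or slow overseas sites.

- Back up the default sources:

`sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak`

- Configure the mirror:

`sudo vi /etc/apt/sources.list`

with the following content:

`deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse`

Update

After editing the file, run the following command for the change to take effect:

`sudo apt-get update`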
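As an alternative to editing the file by hand, the mirror switch can be done with sed. This is a sketch under assumptions: `use_aliyun_mirror` is a hypothetical helper, and it assumes the existing entries point at `archive.ubuntu.com`, a country subdomain of it, or `security.ubuntu.com`.

```shell
# Sketch: rewrite Ubuntu archive/security URLs in an APT sources file
# to the Aliyun mirror. sed keeps a copy of the original as <file>.bak.
use_aliyun_mirror() {
    local file="$1"
    sed -E -i.bak \
        's#https?://([a-z0-9-]+\.)*(archive|security)\.ubuntu\.com/ubuntu/?#http://mirrors.aliyun.com/ubuntu/#g' \
        "$file"
}

# Example (run as root, then refresh the package index):
#   use_aliyun_mirror /etc/apt/sources.list
#   apt-get update
```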

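Disable SELinux

Ubuntu does not ship SELinux by default, so nothing needs to be done here; on CentOS it has to be disabled.

Disable swap

To disable swap temporarily:

`sudo swapoff -a`

To disable it permanently:

`sudo vi /etc/fstab`

Comment out the swap entry (the last line of the file, shown below), then reboot for the change to take effect:

`/swap.img none swap sw 0 0`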
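Commenting out the swap entry can also be done non-interactively. A minimal sketch, assuming swap is declared in `/etc/fstab` as a line with a whitespace-delimited `swap` field; `comment_out_swap` is a hypothetical helper name.

```shell
# Sketch: prefix '#' to every uncommented line that contains "swap"
# as a whitespace-delimited field. The original is kept as <file>.bak.
comment_out_swap() {
    local file="$1"
    sed -E -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}

# Example (run as root):
#   swapoff -a
#   comment_out_swap /etc/fstab
```

Running it a second time leaves the file unchanged, because lines that already start with `#` are skipped.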

- Modify kernel parameters:

`sudo tee /etc/modules-load.d/containerd.conf <<EOF`

`sudo tee /etc/sysctl.d/kubernetes.conf <<EOF`

Run the following command to apply the settings above:

`sudo sysctl --system`
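The bodies of the two heredocs are not shown above. The sketch below fills them with the values commonly used for containerd-based clusters (the `overlay` and `br_netfilter` modules, bridge netfilter hooks, and IPv4 forwarding). These values are an assumption, not taken from this article; `modules_conf` and `sysctl_conf` are hypothetical helper names.

```shell
# Sketch: print the assumed contents of the two configuration files.

# Kernel modules to load at boot.
modules_conf() {
    printf '%s\n' overlay br_netfilter
}

# Let bridged traffic pass through iptables and enable IPv4 forwarding.
sysctl_conf() {
    cat <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Example:
#   modules_conf | sudo tee /etc/modules-load.d/containerd.conf
#   sudo modprobe overlay && sudo modprobe br_netfilter
#   sysctl_conf | sudo tee /etc/sysctl.d/kubernetes.conf
#   sudo sysctl --system
```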

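Configure containerd

This step is critical. Without it, resources hosted on sites that are unreachable from mainland China cause the k8s installation to fail, for example a failed kubeadm init later on, or nodes stuck in NotReady after kubeadm join.

- Back up the default configuration

`sudo mv /etc/containerd/config.toml /etc/containerd/config.toml.bak`

- Edit the configuration

`sudo vi /etc/containerd/config.toml`

with the following content:

`disabled_plugins = []`

- Enable and restart the containerd service

`sudo systemctl enable containerd`

`sudo systemctl restart containerd`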
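Only the first line of the configuration is shown above. One common way to get a complete file is to start from `containerd config default` and patch two settings: the pause (sandbox) image registry and the systemd cgroup driver. The sketch below is one such approach, not necessarily the configuration used in this article; `patch_containerd_config` is a hypothetical helper, and the availability of the pause image in the Aliyun registry is an assumption.

```shell
# Sketch: patch a containerd config.toml in place (original kept as <file>.bak).
#   $1: path to config.toml
#   $2: registry prefix that hosts the pause image (assumed mirror)
patch_containerd_config() {
    local file="$1" repo="$2"
    sed -E -i.bak \
        -e 's#SystemdCgroup = false#SystemdCgroup = true#' \
        -e "s#(sandbox_image = )\"[^\"]*/pause:#\1\"${repo}/pause:#" \
        "$file"
}

# Example:
#   containerd config default | sudo tee /etc/containerd/config.toml
#   (as root) patch_containerd_config /etc/containerd/config.toml \
#       registry.cn-hangzhou.aliyuncs.com/google_containers
#   sudo systemctl restart containerd
```

The image tag is left untouched, so whatever pause version the default configuration names is the one pulled from the mirror.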

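Install the k8s components

- Add the Aliyun APT source for k8s

`curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -`

- Install the components

`sudo apt install -y kubelet kubeadm kubectl`

To list the available versions of kubelet, run apt-cache madison kubelet; the same command works for the other components once the package name is replaced.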
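The madison output can also be used to pin all three components to one version. A minimal sketch, assuming the usual `package | version | source` layout of `apt-cache madison` with the newest version listed first; `pick_version` is a hypothetical helper and the `1.28.` prefix is only an example.

```shell
# Sketch: read `apt-cache madison <pkg>` output on stdin and print the
# first version string that starts with the given prefix.
pick_version() {
    local prefix="$1"
    awk -F'|' -v p="$prefix" '
        { v = $2; gsub(/[[:space:]]/, "", v) }   # second column, whitespace removed
        index(v, p) == 1 { print v; exit }       # first match wins
    '
}

# Example:
#   ver=$(apt-cache madison kubelet | pick_version 1.28.)
#   sudo apt install -y kubelet="$ver" kubeadm="$ver" kubectl="$ver"
#   sudo apt-mark hold kubelet kubeadm kubectl
```

Holding the packages keeps a routine `apt upgrade` from moving the cluster components to a different version.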

Initialize the master

- Generate the default kubeadm configuration file

`sudo kubeadm config print init-defaults > kubeadm.yaml`

- Edit the default configuration

`sudo vi kubeadm.yaml`

Four changes are made in total:

1. Set localAPIEndpoint.advertiseAddress to the master's IP;

2. Set nodeRegistration.name to the name of the current node;

3. Set imageRepository to a domestic mirror: registry.cn-hangzhou.aliyuncs.com/google_containers

4. Add networking.podSubnet. Its range must not overlap networking.serviceSubnet or the node network 192.168.1.0/24, so 10.10.0.0/16 is used here.

The modified file looks like this:

`apiVersion: kubeadm.k8s.io/v1beta3`
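Since only the first line of the file is shown, here is a sketch of a minimal configuration that carries the four changes listed above. It is not the author's full file: fields such as `criSocket`, `bindPort`, and `serviceSubnet` are filled with kubeadm's usual defaults as an assumption, and `render_kubeadm_config` is a hypothetical helper.

```shell
# Sketch: print a minimal kubeadm configuration.
#   $1: master IP, $2: node name, $3: pod subnet CIDR
render_kubeadm_config() {
    local master_ip="$1" node_name="$2" pod_subnet="$3"
    cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${master_ip}
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  name: ${node_name}
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: ${pod_subnet}
EOF
}

# Example with the values from this article:
#   render_kubeadm_config 192.168.1.6 k8s-master 10.10.0.0/16 > kubeadm.yaml
```

The file generated by `kubeadm config print init-defaults` contains more fields (for example `kubernetesVersion`); editing that file in place, as described above, keeps them.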

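- Run the initialization

`sudo kubeadm init --config kubeadm.yaml`

If init reports an error, check the kubelet logs with journalctl -u kubelet. After a failure, reset with the command below before retrying; otherwise the next init fails on port conflicts:

`sudo kubeadm reset`

Once initialization succeeds, run the commands shown in the kubeadm output, which begin with:

`mkdir -p $HOME/.kube`

Then switch to the root user and run:

`export KUBECONFIG=/etc/kubernetes/admin.conf`

Do not rush to kubeadm join the other nodes yet. As root, the following command lists the nodes:

`kubectl get nodes`

At this point the node is still NotReady; the next step is to install a network plugin.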

Install the flannel network plugin on the master

- Download the kube-flannel.yml manifest

`curl -LO https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml`

- Edit kube-flannel.yml

`# Set "Network" to the CIDR of your pod network; this article uses 10.10.0.0/16`
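The edit to `"Network"` can be made with sed instead of an editor. A minimal sketch, assuming the manifest contains a single `"Network": "<cidr>"` entry in its net-conf.json section; `set_flannel_network` is a hypothetical helper.

```shell
# Sketch: set the "Network" value in kube-flannel.yml to the given CIDR.
# The original file is kept as <file>.bak.
set_flannel_network() {
    local file="$1" cidr="$2"
    sed -E -i.bak "s#(\"Network\": )\"[^\"]+\"#\1\"${cidr}\"#" "$file"
}

# Example, using the podSubnet chosen for kubeadm:
#   set_flannel_network kube-flannel.yml 10.10.0.0/16
```

The value has to match `networking.podSubnet` from kubeadm.yaml, otherwise pods receive addresses outside the range the cluster expects.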

- Install the plugin from kube-flannel.yml

`kubectl apply -f kube-flannel.yml`

To uninstall the plugin:

`kubectl delete -f kube-flannel.yml`

Once flannel is installed, the master node gradually turns Ready. If it stays NotReady, try rebooting the master node.

Join the two worker nodes

After init succeeds, the master prints a kubeadm join command for adding worker nodes. With the flannel network plugin installed on the master, run that command on each of the two worker nodes:

`sudo kubeadm join 192.168.1.6:6443 --token ui3x3h.n3op589vk67med1t --discovery-token-ca-cert-hash sha256:ecd2d64f701233c28f78ce3406b0f8beb75acc4bbf7cebc25d862196e95a486f`

If the command printed by the master has been lost, regenerate it on the master:

`sudo kubeadm token create --print-join-command`

After running kubeadm join on a worker, go back to the master and check whether the worker nodes gradually turn Ready:

`kubectl get nodes`

If a worker stays NotReady for a long time, check the pod status:

`sudo kubectl get pods -n kube-system`

To inspect the details and events of a specific pod (for container logs, use kubectl logs instead):

`kubectl describe pod -n kube-system [pod-name]`
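When checking node status repeatedly, it helps to filter the list down to the nodes that still need attention. A minimal sketch that parses the output of `kubectl get nodes --no-headers`; `not_ready_nodes` is a hypothetical helper.

```shell
# Sketch: read `kubectl get nodes --no-headers` on stdin and print the names
# of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
    # Column 1 is NAME, column 2 is STATUS (e.g. Ready, NotReady,
    # Ready,SchedulingDisabled).
    awk '$2 !~ /^Ready(,|$)/ { print $1 }'
}

# Example (on the master):
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready.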

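Once all worker nodes are Ready, the Dashboard can be installed.

Install the Dashboard

Prepare the manifest

If GitHub is reachable from your network, follow the documentation there: github.com/kubernetes/…. Version 2.7.0 is used here.

Otherwise, create the file by hand on the master node:

`sudo vi recommended.yaml`

with the following content:

`# Copyright 2017 The Kubernetes Authors.`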

Install

Install the Dashboard with:

`kubectl apply -f recommended.yaml`

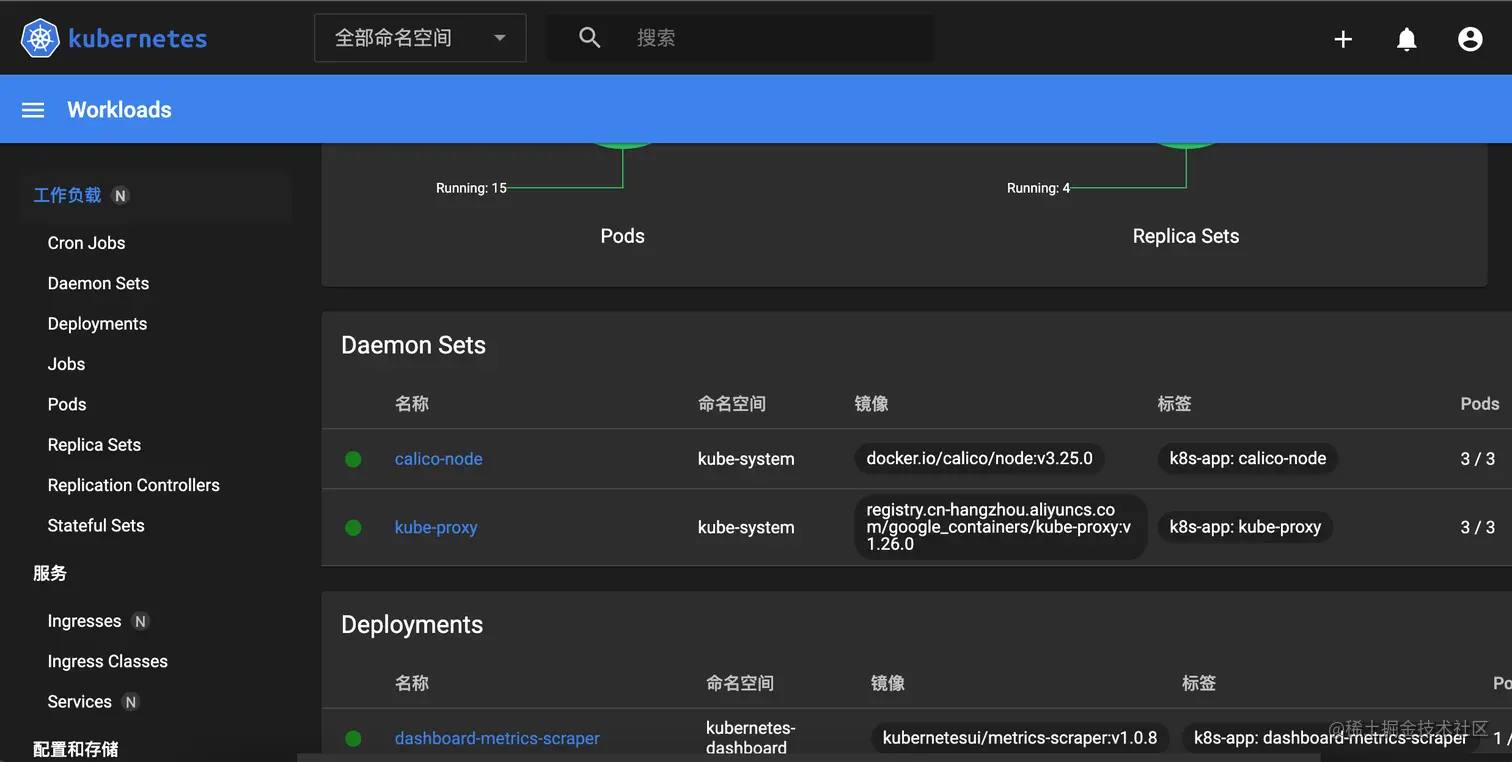

Now wait until kubectl get pods -A shows every pod as Running:

kubernetes-dashboard dashboard-metrics-scraper-7bc864c59-tdxdd 1/1 Running 0 5m32s

kubernetes-dashboard kubernetes-dashboard-6ff574dd47-p55zl 1/1 Running 0 5m32s

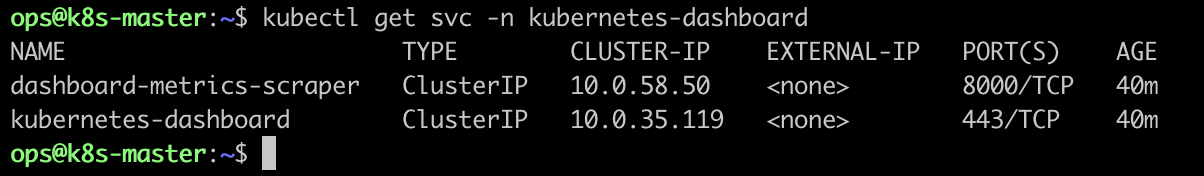

- Check the service ports

`kubectl get svc -n kubernetes-dashboard`

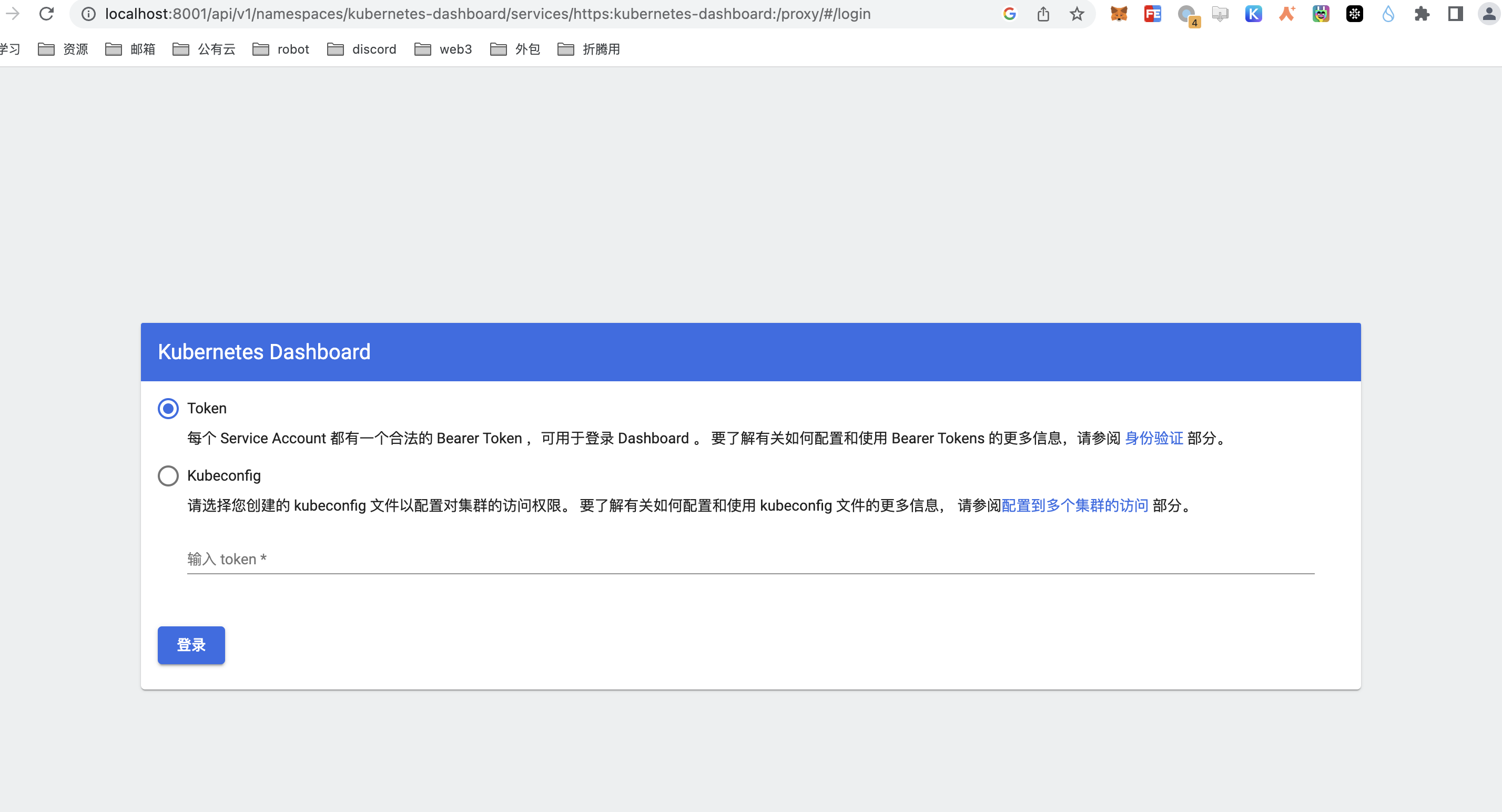

- Open the Dashboard in a browser

The Dashboard cannot be reached directly, so go through a proxy:

`kubectl proxy`
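With the proxy running, the Dashboard is served under the API server's service-proxy path. The sketch below builds that URL; it assumes the Dashboard was installed into the `kubernetes-dashboard` namespace with the service name `kubernetes-dashboard` (as in the upstream recommended.yaml), and `dashboard_proxy_url` is a hypothetical helper.

```shell
# Sketch: print the Dashboard URL exposed through `kubectl proxy`.
#   $1: local proxy port (kubectl proxy listens on 8001 unless --port is given)
dashboard_proxy_url() {
    local port="${1:-8001}"
    echo "http://localhost:${port}/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/"
}

# Example:
#   kubectl proxy &
#   dashboard_proxy_url
```

By default `kubectl proxy` listens only on the loopback address, so the browser has to run on the same machine as the proxy (or reach it through something like an SSH tunnel).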

Log in with a token

Generate the token

On the master node, create the manifest for the admin-user account:

`sudo vi dash.yaml`

with the following content:

`apiVersion: v1`
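Only the first line of dash.yaml is shown. A manifest that creates a ServiceAccount and a ClusterRoleBinding, both named admin-user, typically looks like the sketch below. Binding to the built-in `cluster-admin` role is an assumption here, and `render_admin_user` is a hypothetical helper.

```shell
# Sketch: print a manifest with a ServiceAccount and a ClusterRoleBinding
# named admin-user in the kubernetes-dashboard namespace.
render_admin_user() {
    cat <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF
}

# Example:
#   render_admin_user > dash.yaml
```

`cluster-admin` grants full control over the cluster, which is convenient on a test cluster like this one; a narrower role is the safer choice anywhere else.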

Next, create admin-user with:

`kubectl apply -f dash.yaml`

The output is:

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

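This confirms that the admin-user account was created. Next, generate a token for it:

`kubectl -n kubernetes-dashboard create token admin-user`

Copy the generated token, paste it into the token field in the browser, and click the sign-in button.

This completes the k8s installation on Ubuntu 22.04.

Note:

The reference document also installs Docker, but according to the official documentation k8s does not need both Docker and containerd; a single container runtime is enough. containerd is used here.

References: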